Converting Music in Audio Representation to Symbolic/Visual Representation by Yuval M.

Presentation

Summary

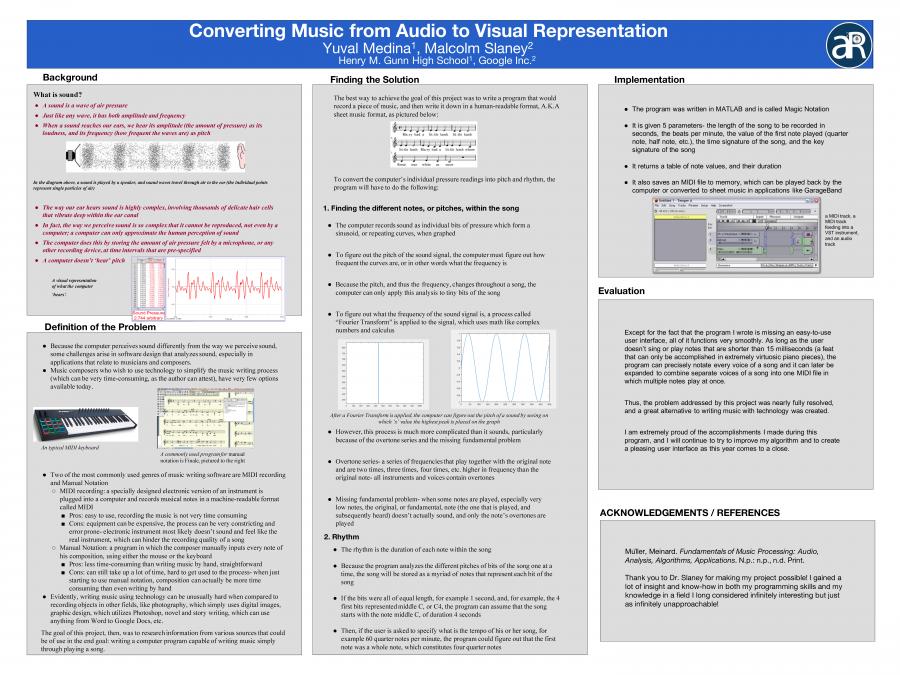

What series of procedures is needed to analyze music recorded and stored in an audio format and produce a machine- and human-readable symbolic representation of that music, and how could these procedures be implemented in computer code (an app)? Music, much like other mediums of expression and communication, needs an intelligent and usable way to be stored, read, and written with today's technology, so that it is as accessible as possible. Music can be represented in three ways. The first is sheet music, a visual representation, much like a PDF of a book. The second is an audio representation, e.g. music in MP3 format. The third is a symbolic representation, such as the MIDI format, which is, for a machine, the closest equivalent of what a piece of music is to a musician, but which is itself practically unreadable to a musician (Müller, Fundamentals of Music Processing). Only one of these formats can be read by a person: sheet music. Another can be heard: the audio representation. Today, there is virtually no reliable, accessible way to convert music in an audio format into written music that a musician can use. The situation is as odd as if authors had to handwrite their books and scan them as images, and readers could only read those scanned images on a computer. There is not yet a program that is to a musician what Microsoft Word or Google Docs is to an author. In addition, many composers who are unacquainted with musical symbols and conventions cannot use notation programs such as MuseScore or Finale to write down their music note by note. Even though they can perform their works and share them with others, they can do so only outside the realm of technology. This is unacceptable given how much progress has been made in making other forms of information easy to create and use through technology, including images, visual art, and literary works.
It is essential that composers be able to simply record their pieces and share them as sheet music; this is the main concern of this project and the problem the app is meant to solve. The primary literature used to address this problem includes Fundamentals of Music Processing by Meinard Müller. The book concisely covers the main aspects of dealing with music and sound in the context of computer programming, with topics ranging from how music can be represented as data in a computer, to the mathematical analysis of sound waves, to harmony, inharmonicity, and music theory. It is the central resource for achieving the goals of this research project, but the project will not be limited to it. As the project progresses, more books, research papers, and academic journals from the professional community will be used...

The timeline of this project consists of two distinct phases devoted strictly to research, followed by a final phase of implementing the researched principles in an app. The first phase consists of researching methods for analyzing audio and making sense of sound waves, such as the Fourier transform, in books such as Fundamentals of Music Processing. These mathematical concepts will be evaluated and documented so that they can later be used to convert audio to MIDI in the app. This first phase is estimated to take approximately six weeks, from December 3rd to January 15th. In the second phase, a methodology will be developed to convert the detected frequencies, once stored in MIDI format, into a human-readable format: sheet music. This will mainly consist of brainstorming solutions in the form of logic operations that will later be implemented in the app. This time will also be used to research how to carry out experiments and iterations of this logic in the following phase. The second phase will take less than a month, from about January 16th to February 5th.
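As a rough illustration of the first two phases, the sketch below estimates the frequency of a recorded tone with a discrete Fourier transform and maps it to a MIDI note number and a note name, using the standard equal-temperament relation p = 69 + 12·log2(f / 440), where A4 = 440 Hz = MIDI note 69. It is a minimal sketch under simplifying assumptions: a single steady tone, a naive O(N²) DFT written out for clarity instead of an FFT library, and the assumption that the strongest spectral peak is the fundamental. That last assumption often fails for real instruments, whose overtones can be louder than the fundamental. All function names are hypothetical.

```python
import cmath
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def dft_magnitudes(samples):
    """Naive O(N^2) discrete Fourier transform; returns |X(k)| for k = 0..N//2."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = 0j
        for i, x in enumerate(samples):
            acc += x * cmath.exp(-2j * math.pi * k * i / n)
        mags.append(abs(acc))
    return mags


def peak_frequency(samples, sample_rate):
    """Frequency (Hz) of the strongest DFT bin, skipping the DC bin k = 0.

    Assumption: the strongest bin is the fundamental (true for a pure tone).
    """
    mags = dft_magnitudes(samples)
    k_peak = max(range(1, len(mags)), key=lambda k: mags[k])
    return k_peak * sample_rate / len(samples)


def frequency_to_midi(freq_hz):
    """Nearest MIDI note number, with A4 = 440 Hz = MIDI 69 (equal temperament)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def midi_to_name(pitch):
    """Scientific pitch name, e.g. 60 -> 'C4', 69 -> 'A4'."""
    return f"{NOTE_NAMES[pitch % 12]}{pitch // 12 - 1}"


if __name__ == "__main__":
    # Synthetic 440 Hz sine: 400 samples at 8 kHz gives 20 Hz bins, so 440 Hz is bin 22.
    fs = 8000
    tone = [math.sin(2 * math.pi * 440 * t / fs) for t in range(400)]
    f = peak_frequency(tone, fs)
    p = frequency_to_midi(f)
    print(f, p, midi_to_name(p))
```

A practical app would more likely compute a short-time Fourier transform over overlapping frames with an FFT and use a more robust fundamental-frequency estimator, but the mapping from frequency to MIDI pitch to note name would stay the same.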
The last phase of this project will consist of writing the app that implements what was researched in the previous two phases, and continuously iterating on and improving its logic using machine learning and sample recordings. This phase will last from about February 6th until sometime between March 20th and March 31st. The project will culminate in the app itself, which will be used to record a piece of music one musical voice at a time and convert it into sheet music. The investigative and experimental findings of this project will be published in April, and the app will be showcased in May.
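Because the app is planned to record one musical voice at a time, each moment of a recording contains at most one pitch. A per-frame pitch estimate can therefore be turned into notes by merging consecutive frames that share the same pitch. The sketch below illustrates that step under stated assumptions. Its input is a hypothetical list of per-frame MIDI pitches (None meaning silence) produced by some estimator, with frames spaced `hop_seconds` apart. Very short runs are discarded as glitches, and durations are quantized to a beat grid at a known tempo. It is a simple rule-based stand-in; the machine-learning approach the project plans could replace or refine any of these steps. The names `Note`, `frames_to_notes`, and `to_beats` are illustrative and do not come from an existing library.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Note:
    pitch: int       # MIDI note number
    start: float     # onset time in seconds
    duration: float  # length in seconds


def frames_to_notes(frame_pitches: List[Optional[int]], hop_seconds: float,
                    min_frames: int = 2) -> List[Note]:
    """Merge runs of identical per-frame pitches into note events.

    frame_pitches[i] is the (hypothetical) MIDI pitch estimated for frame i,
    or None for silence. Runs shorter than min_frames are dropped as glitches.
    """
    notes: List[Note] = []
    run_pitch: Optional[int] = None
    run_start = 0
    # A trailing None sentinel guarantees the final run is flushed.
    for i, pitch in enumerate(list(frame_pitches) + [None]):
        if pitch != run_pitch:
            length = i - run_start
            if run_pitch is not None and length >= min_frames:
                notes.append(Note(run_pitch, run_start * hop_seconds, length * hop_seconds))
            run_pitch, run_start = pitch, i
    return notes


def to_beats(note: Note, tempo_bpm: float, grid: float = 0.25) -> float:
    """Duration in quarter-note beats, rounded to the nearest multiple of grid."""
    beats = note.duration * tempo_bpm / 60.0
    return max(grid, round(beats / grid) * grid)


if __name__ == "__main__":
    # Hypothetical estimates: silence, C4 for 4 frames, D4 for 4 frames, a 1-frame glitch.
    frames = [None, 60, 60, 60, 60, 62, 62, 62, 62, 64, None]
    for note in frames_to_notes(frames, hop_seconds=0.125):
        print(note, to_beats(note, tempo_bpm=120))
```

Quantized durations in beats are what a notation program ultimately needs to choose note values (quarter, eighth, and so on), which is why the sketch stops at that point rather than at raw seconds.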